Robot Imitates Human Motion

Reprogramming robots to perform a task consumes time and reduces the productivity of industrial processes, especially in industries whose operations change frequently. A traditional solution to this problem is to let a human operator control the robot using devices such as keyboards and joysticks. However, this becomes increasingly burdensome for the operator as tasks grow in complexity. An alternative is to let robots learn a task demonstrated by a human expert. This form of implicit robot programming is called Learning from Demonstration (LfD). As a first step toward this larger goal, the robot should be able to track and mimic human motion.

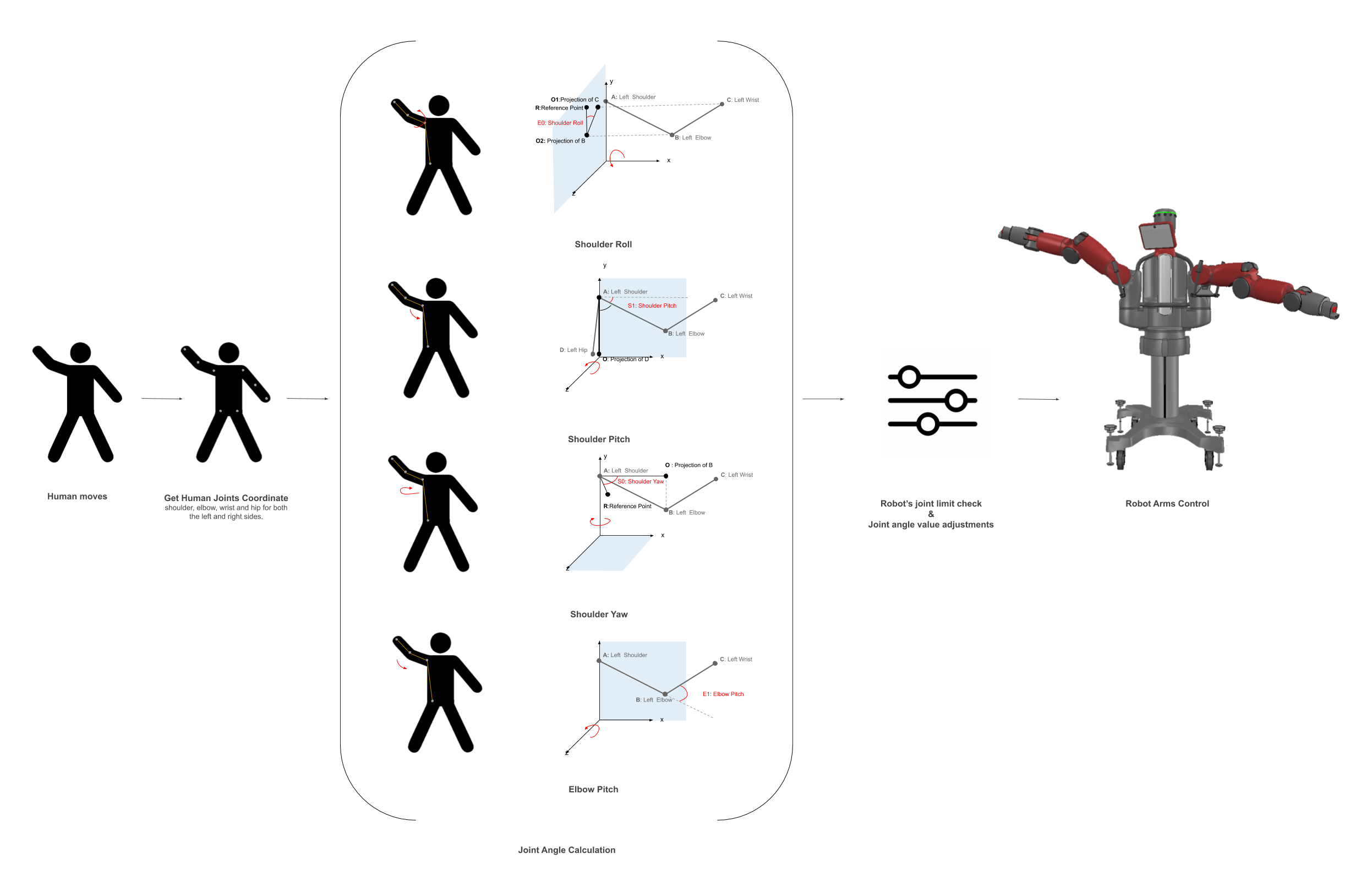

I proposed and implemented a methodology to map human arm motion to robot arm motion. I showcased the project at Imagine RIT 2018: Creativity and Innovation Festival, which drew more than 35,000 visitors.

How I built it

- Skeletal Tracking/Skeletal Data

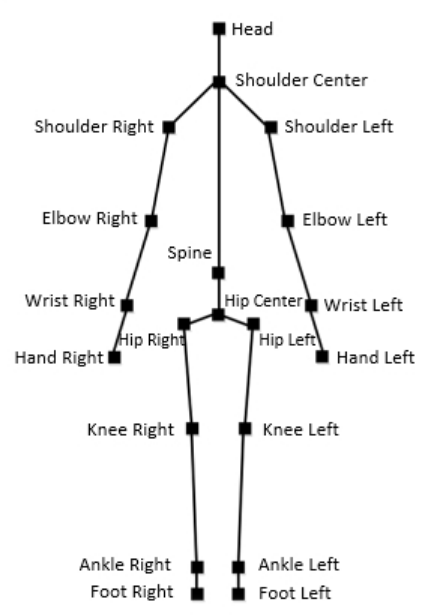

In this project, a Kinect V1 is used for skeletal tracking. Kinect is a low-cost motion-sensing camera created by Microsoft, with an RGB camera and a 3D depth sensor on the front. The skeletal tracking algorithm detects the human's joints using the RGB camera and the 3D depth sensor, and it represents each joint as a point (x, y, z) in 3D space. The skeletal data acquired from the Kinect consists of 20 joint points for the tracked person. The graphic shows the skeletal joints the Kinect returns. Because the goal of this project is to mimic the upper-body movement of the human, the focus is on 8 of the 20 joint points acquired from the Kinect: the shoulder, elbow, wrist and hip on both the left and right sides.
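Selecting the 8 joints of interest out of each 20-joint frame amounts to a simple index lookup. The sketch below assumes the 0-based joint ordering of the Kinect for Windows SDK v1 (for example, shoulder left = 4, hip right = 16). The dictionary `JOINTS_OF_INTEREST` and the function `select_upper_body` are hypothetical names, and you should check the indices against whatever data source you use, since MATLAB arrays are 1-based.

```python
# Hypothetical sketch: keep only the 8 upper-body joints of interest from a
# 20-joint Kinect skeleton frame. ASSUMPTION: indices follow the 0-based
# Kinect for Windows SDK v1 joint ordering; verify against your data source.
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]

# Assumed 0-based indices of the joints of interest in the 20-joint skeleton.
JOINTS_OF_INTEREST: Dict[str, int] = {
    "shoulder_left": 4,
    "elbow_left": 5,
    "wrist_left": 6,
    "shoulder_right": 8,
    "elbow_right": 9,
    "wrist_right": 10,
    "hip_left": 12,
    "hip_right": 16,
}


def select_upper_body(skeleton: List[Point3]) -> Dict[str, Point3]:
    """Return the 8 joints of interest from one 20-joint skeleton frame."""
    if len(skeleton) != 20:
        raise ValueError(f"expected 20 joints, got {len(skeleton)}")
    return {name: skeleton[idx] for name, idx in JOINTS_OF_INTEREST.items()}


if __name__ == "__main__":
    # Synthetic frame: joint i sits at (i, 0, 2), just to exercise the lookup.
    frame = [(float(i), 0.0, 2.0) for i in range(20)]
    joints = select_upper_body(frame)
    print(joints["elbow_right"])  # (9.0, 0.0, 2.0)
```

The length check rejects partial frames early, which is useful because the sensor may lose track of a person between frames.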

- Baxter Research Robot / Arm Joint Control

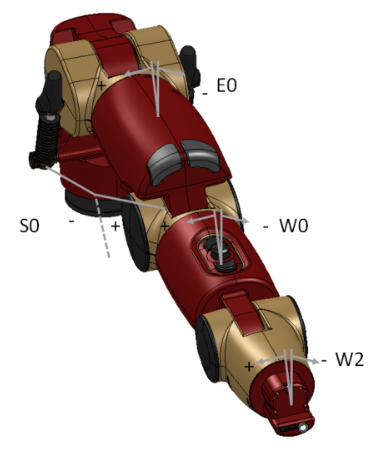

The Baxter robot is used in this project. Baxter is an anthropomorphic robot with two seven-degree-of-freedom arms. It provides a stand-alone Robot Operating System (ROS) Master, to which any development workstation can connect to control Baxter via the various ROS APIs.

To control Baxter's arms, one of the following control modes can be used: Joint Position Control, Joint Velocity Control or Joint Torque Control. This project uses Joint Position Control mode, in which the desired joint angle values are specified.

Each arm has seven joints (S0, S1, E0, E1, W0, W1 and W2), so a command typically consists of seven values, including the following pitch joints:

- Shoulder Pitch: S1

- Elbow Pitch: E1

- Wrist Pitch: W1
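The sketch below shows what a position-mode command for one arm might contain. It is a plain-Python dict modelled on the fields of the `baxter_core_msgs/JointCommand` ROS message (`mode`, `names`, `command`), so it runs without ROS installed. The value `POSITION_MODE = 1`, the joint-name format `left_s1`, and the idea of publishing it on a topic such as `/robot/limb/left/joint_command` are assumptions to check against the Baxter SDK documentation. The function `position_command` is a hypothetical helper, not part of any library.

```python
# Hypothetical sketch: build a Baxter joint position command as a plain dict
# mirroring the (assumed) fields of baxter_core_msgs/JointCommand.
from typing import Dict, List

POSITION_MODE = 1  # ASSUMED value of JointCommand.POSITION_MODE

# Baxter's seven arm joints, shoulder to wrist.
JOINT_SUFFIXES: List[str] = ["s0", "s1", "e0", "e1", "w0", "w1", "w2"]


def position_command(side: str, angles_rad: Dict[str, float]) -> dict:
    """Build a position-mode command for one arm ('left' or 'right').

    angles_rad maps joint suffixes (e.g. 's1') to target angles in radians.
    Only the joints given are included, in shoulder-to-wrist order.
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    unknown = set(angles_rad) - set(JOINT_SUFFIXES)
    if unknown:
        raise ValueError(f"unknown joints: {sorted(unknown)}")
    names = [f"{side}_{j}" for j in JOINT_SUFFIXES if j in angles_rad]
    command = [angles_rad[j] for j in JOINT_SUFFIXES if j in angles_rad]
    return {"mode": POSITION_MODE, "names": names, "command": command}


if __name__ == "__main__":
    cmd = position_command("left", {"s1": -0.5, "e1": 1.2, "w1": 0.3})
    print(cmd["names"])    # ['left_s1', 'left_e1', 'left_w1']
    print(cmd["command"])  # [-0.5, 1.2, 0.3]
```

Keeping names and values in two parallel lists matches the message layout assumed above. Iterating over `JOINT_SUFFIXES` keeps their order consistent regardless of how the input dict was built.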

- MATLAB

I used MATLAB to acquire and process the Kinect data and to control the motion of Baxter's arms. The MATLAB Image Acquisition Toolbox support package for Kinect made it possible to acquire Kinect image sensor data directly into MATLAB. Using MATLAB's Robotics System Toolbox, a workstation was set up to communicate with and control Baxter.

Robotics System Toolbox provides an interface between MATLAB and ROS that enables communication with a ROS network. A ROS node was created in MATLAB to communicate with the ROS Master running on Baxter. This made it possible to create and send ROS messages and to publish and subscribe to topics directly from MATLAB.

How it works

The system consists of three main parts: tracking the human joint positions (skeletal tracking); calculating the human joint angles and mapping them to the robot arm; and controlling the robot arms.

Based on an analysis of the human arm, four joint angles were judged sufficient to describe human arm movement: Shoulder Pitch, Shoulder Yaw, Shoulder Roll and Elbow Pitch. These four angles are calculated from the Kinect skeletal data for both the left and right arms.
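All of these angles come from geometry on the 3D joint positions. As a minimal illustration of that kind of computation, the sketch below forms the vector between two sequential joints, takes its Euclidean length, and uses the dot product to get the angle between the upper arm and the forearm at the elbow. This is a generic geometric sketch, not necessarily the exact formulas used in this project. The function names are hypothetical, and converting the result to Baxter's E1 sign and offset convention is not shown.

```python
# Minimal sketch (generic geometry, not necessarily this project's formulas):
# vectors between sequential joints, their lengths, and the elbow angle.
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def vector(p_from: Vec3, p_to: Vec3) -> Vec3:
    """Vector pointing from one joint position to the next."""
    return (p_to[0] - p_from[0], p_to[1] - p_from[1], p_to[2] - p_from[2])


def length(v: Vec3) -> float:
    """Euclidean length of a 3D vector."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def elbow_angle(shoulder: Vec3, elbow: Vec3, wrist: Vec3) -> float:
    """Angle in radians between upper arm and forearm at the elbow.

    Returns pi for a fully straight arm and pi/2 for a right-angle bend.
    Mapping this to Baxter's E1 convention (offset/sign) is not shown.
    """
    upper = vector(elbow, shoulder)  # elbow -> shoulder
    fore = vector(elbow, wrist)      # elbow -> wrist
    dot = sum(a * b for a, b in zip(upper, fore))
    cos_theta = dot / (length(upper) * length(fore))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


if __name__ == "__main__":
    s, e, w = (0.0, 0.0, 0.0), (0.0, -0.25, 0.0), (0.5, -0.25, 0.0)
    print(math.degrees(elbow_angle(s, e, w)))  # 90.0
```

Clamping the cosine matters in practice: noisy Kinect points can push the ratio marginally outside [-1, 1], which would make `math.acos` raise a `ValueError`.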

The angles were found from geometric relations between the joint points acquired from the Kinect. I first computed the coordinates of the vectors connecting each pair of sequential joint points, and then the lengths of these vectors: