Task Planning and Execution for Human-Robot Team Performing a Shared Task in a Shared Workspace

A true collaborative robot is expected to:

- Ensure human safety.

- Be aware of human actions.

- Be able to decide what it should do (select its own actions) without the human having to command it.

- Do all of this in real time, since the human keeps changing the environment.

How I built it

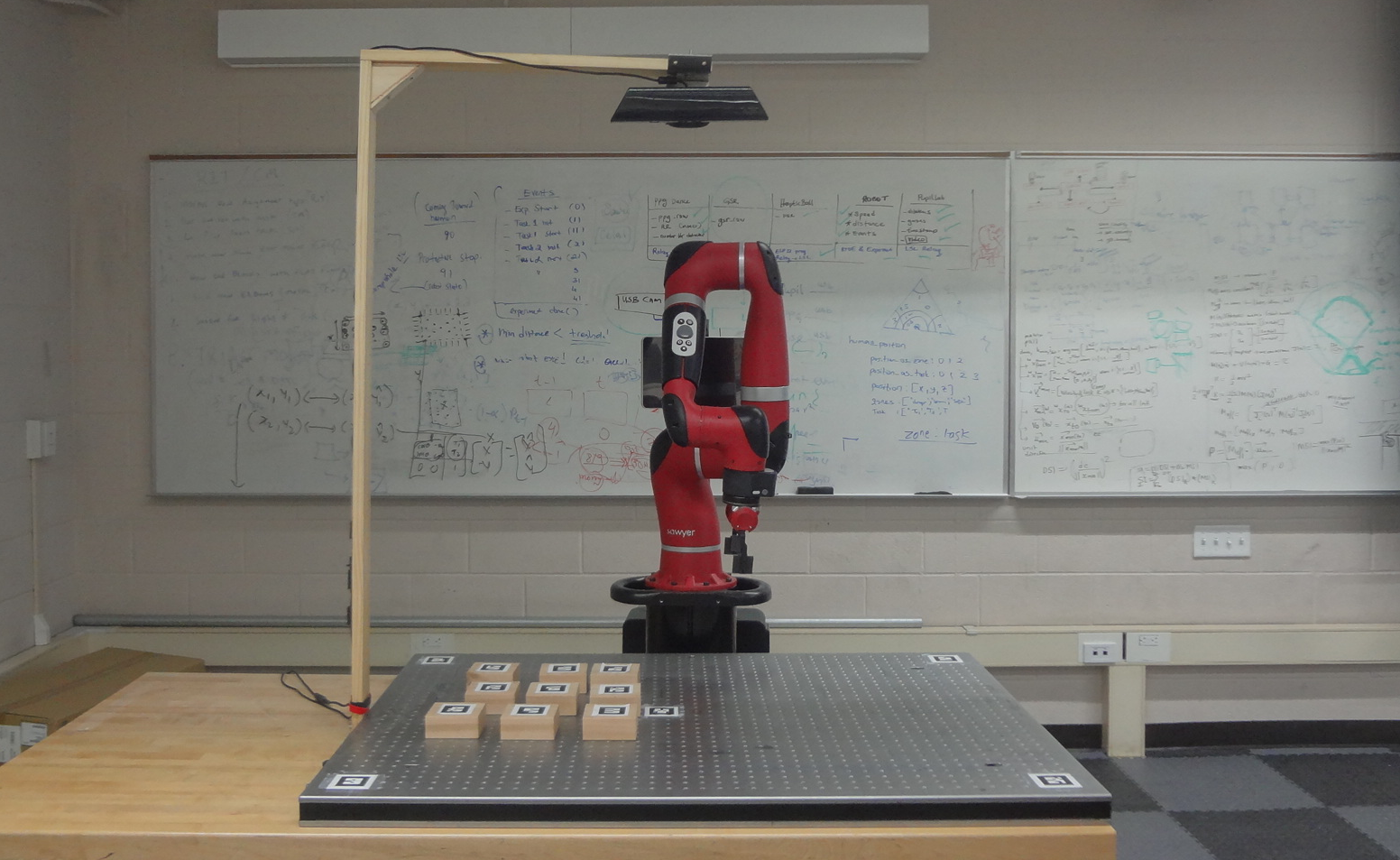

As a first step in this research, I created a workspace in which the human-robot team can collaborate. This includes the physical setup that helps the robot perceive the human, the workpieces, and the environment. More information about the workspace can be found in Workspace for Human-Robot Collaboration.

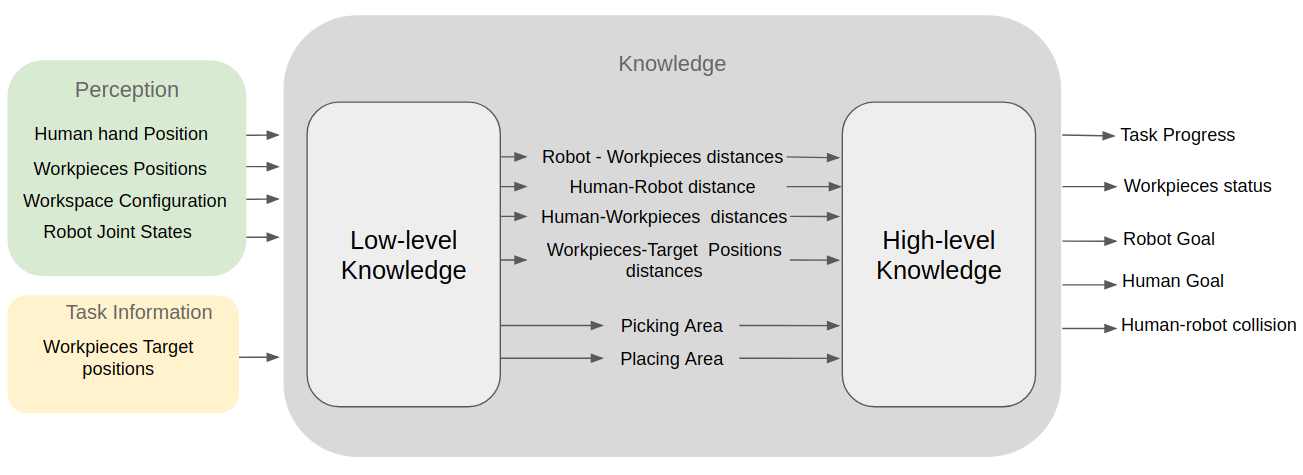

Robot Knowledge:

The human is far superior to the robot counterpart at forming connections and contextualizing the data gathered through the human senses. Compensating for this imbalance of capabilities is an essential first step toward studying and improving any aspect of any HRC scenario. The robot needs to understand changes in the environment, but the raw data it gathers through perception is not enough to support decision making; it needs a higher-level understanding of the environment. This knowledge layer is created and shared with other modules through ROS services and ROS topics, and it holds the following information:

- Task progress: how many workpieces need to be manipulated to achieve the final task goal.

- Human goal: predicting the workpiece the human wants to manipulate based on the human hand trajectory.

- Workpiece status: a workpiece can have one of four statuses: being manipulated by the human, being manipulated by the robot, in its final placement location, or still needing to be manipulated.

- Human-robot collision: event where the minimum distance between human and robot is below a predefined threshold.

- Robot goal: the workpiece the robot should manipulate, based on the robot's current location in the workspace relative to the workpieces that still need to be manipulated. The robot goal is updated whenever the robot re-evaluates the current state of the environment.
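As a rough illustration of the kind of information this layer holds, the sketch below models the workpiece statuses, task progress, the collision event, and the two goals in plain Python. Everything in it is an assumption for illustration: the names (`Status`, `Workpiece`, `predict_human_goal`, `select_robot_goal`), the 2D table coordinates, the 0.10 m threshold, the heading-alignment heuristic for the human goal, and the nearest-workpiece rule for the robot goal are hypothetical stand-ins rather than the system's actual implementation. The ROS topics and services are left out.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    """The four workpiece statuses listed above."""
    HUMAN = "being manipulated by the human"
    ROBOT = "being manipulated by the robot"
    PLACED = "in its final placement location"
    TODO = "needs to be manipulated"


@dataclass
class Workpiece:
    name: str
    position: tuple  # (x, y) on the table in metres (hypothetical frame)
    status: Status = Status.TODO


COLLISION_THRESHOLD = 0.10  # metres; hypothetical value


def task_progress(workpieces):
    """Number of workpieces that have not yet reached their final location."""
    return sum(1 for w in workpieces if w.status is not Status.PLACED)


def is_collision(min_distance, threshold=COLLISION_THRESHOLD):
    """Collision event: minimum human-robot distance below the threshold."""
    return min_distance < threshold


def predict_human_goal(hand_prev, hand_now, workpieces):
    """Hypothetical heuristic: the TODO workpiece best aligned with the hand's motion."""
    dx, dy = hand_now[0] - hand_prev[0], hand_now[1] - hand_prev[1]
    speed = math.hypot(dx, dy)
    if speed == 0.0:
        return None  # hand is not moving: no prediction
    best, best_cos = None, -2.0
    for w in workpieces:
        if w.status is not Status.TODO:
            continue
        tx, ty = w.position[0] - hand_now[0], w.position[1] - hand_now[1]
        dist = math.hypot(tx, ty)
        if dist == 0.0:
            return w  # hand is already on this workpiece
        # Cosine between hand velocity and hand-to-workpiece direction.
        cos = (dx * tx + dy * ty) / (speed * dist)
        if cos > best_cos:
            best, best_cos = w, cos
    return best


def select_robot_goal(robot_pos, workpieces, human_goal=None):
    """Hypothetical rule: nearest TODO workpiece, skipping the human's predicted goal."""
    candidates = [w for w in workpieces
                  if w.status is Status.TODO and w is not human_goal]
    if not candidates:
        return None
    return min(candidates, key=lambda w: math.dist(robot_pos, w.position))


# Usage: the hand moves along +x, toward workpiece B.
pieces = [Workpiece("A", (0.2, 0.1)), Workpiece("B", (0.6, 0.0)),
          Workpiece("C", (0.0, 0.5), Status.PLACED)]
human_goal = predict_human_goal((0.0, 0.0), (0.05, 0.0), pieces)  # B
robot_goal = select_robot_goal((0.0, 0.4), pieces, human_goal)    # A
```

Here B is predicted as the human goal, so the robot is assigned the remaining workpiece A, and task progress reports two workpieces still to go. In a running system these functions would be re-evaluated on every perception update.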

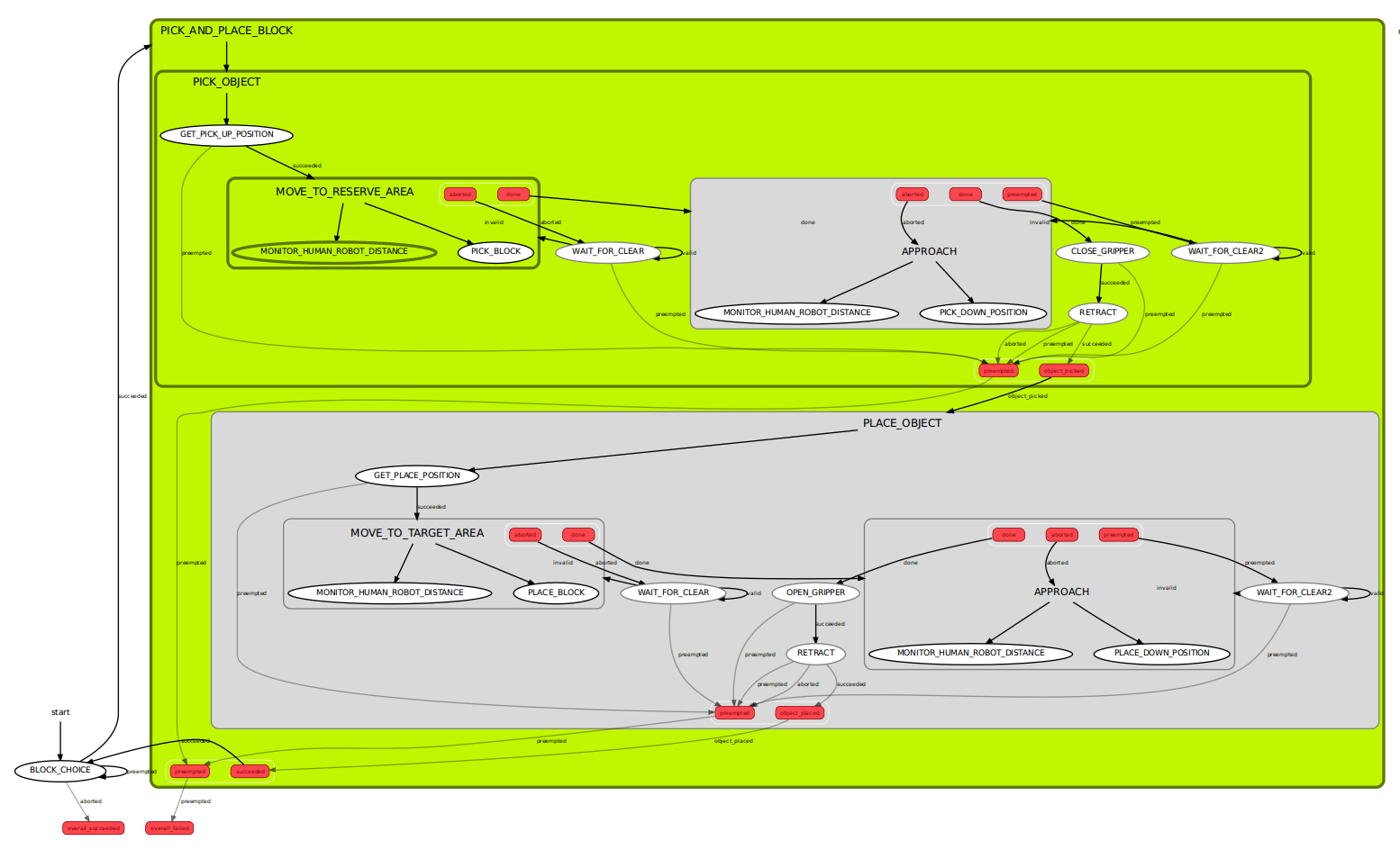

Robot Reasoning For Action Selection and Execution

Based on the nature of table-top object manipulation tasks, I divided the robot's ability to reason about and select its actions into two levels: reasoning about which object it should manipulate, and reasoning about human safety when executing a sequence of object manipulation actions. The robot needs to select and execute, on the fly, an action from a set of possible actions while taking the human's activity in the workspace into account.

- Selecting a workpiece for the robot to manipulate

- Safe action execution
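The two levels can be sketched as a decision cycle that checks safety before task selection, plus an executor that holds motion whenever the human comes too close. This is a hedged sketch under simplifying assumptions: `select_action`, `execute`, the `Decision` values, and the 0.10 m threshold are hypothetical, and the simple stop-and-resume rule stands in for whatever safety behavior the real system uses.

```python
from enum import Enum, auto

SAFETY_THRESHOLD = 0.10  # metres; hypothetical minimum human-robot distance


class Decision(Enum):
    MANIPULATE = auto()  # work on the selected workpiece
    PAUSE = auto()       # human too close: hold the current motion
    WAIT = auto()        # nothing left that the human is not reaching for


def select_action(todo, robot_dist, human_goal, min_distance,
                  threshold=SAFETY_THRESHOLD):
    """One decision cycle, re-run whenever the knowledge layer updates.

    todo: names of workpieces that still need to be manipulated.
    robot_dist: name -> distance from the robot to that workpiece (m).
    human_goal: predicted target of the human hand, or None.
    min_distance: current minimum human-robot distance (m).
    """
    # Safety level is checked first and overrides the task level.
    if min_distance < threshold:
        return Decision.PAUSE, None
    # Task level: leave the human's predicted workpiece to the human.
    candidates = [n for n in todo if n != human_goal]
    if not candidates:
        return Decision.WAIT, None
    return Decision.MANIPULATE, min(candidates, key=robot_dist.__getitem__)


def execute(steps, distance_stream, threshold=SAFETY_THRESHOLD):
    """Run motion steps in order, holding on any tick where the human is too close.

    Returns the per-tick log; it is partial if the stream ends early.
    """
    log, i = [], 0
    for d in distance_stream:
        if i == len(steps):
            break
        if d < threshold:
            log.append("pause")  # hold position, resume on a later tick
        else:
            log.append(steps[i])
            i += 1
    return log


dists = {"A": 0.3, "B": 0.1, "C": 0.5}
select_action(["A", "B", "C"], dists, "B", 0.40)  # (Decision.MANIPULATE, 'A')
select_action(["A", "B", "C"], dists, "B", 0.05)  # (Decision.PAUSE, None)
execute(["approach", "grasp", "place"], [0.5, 0.05, 0.5, 0.5])
# ['approach', 'pause', 'grasp', 'place']
```

Although B is closest to the robot, the human is predicted to be reaching for it, so the robot takes A instead. Checking safety first means no task-level decision can override a pause.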

User Studies

I am currently conducting more user studies to validate the developed system. Collaboration is evaluated through both objective and subjective metrics. The objective metrics of interest are:

- Robot Idle Time: Percentage of the total task time during which the robot is not active. The robot can be idle because of predefined rules that prevent human-robot collisions.

- Human Idle Time: Percentage of the total task time during which the human is not active.

- Number of Collisions between the human and the robot: a collision is defined as an event where the minimum distance between the human and the robot is below a predefined threshold.

- Functional Delay: Percentage of the total task time between the end of one agent's action and the beginning of the other agent's action.

- Concurrent Activity: Percentage of the total task time during which both agents are active at the same time.

- Concurrent Activity in the Same Workspace Area: Percentage of the total task time during which both agents are active at the same time within the same workspace area.

- Number of Actions Performed by Each Agent: in our HRC scenario, the number of workpieces manipulated by each agent.

- Time to Complete the Task: the time taken to place all 9 workpieces in their target locations.
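Most of these objective metrics reduce to interval arithmetic over logged activity. The sketch below assumes, hypothetically, that each agent's actions are logged as `(start, end)` intervals in seconds, one per manipulated workpiece, and that the minimum human-robot distance is sampled at fixed ticks. Functional delay is interpreted here as idle gaps bounded by actions of different agents; the same-area concurrency metric is omitted because it would also need workspace-area labels. All function names are illustrative.

```python
def union_length(intervals):
    """Total time covered by (start, end) intervals, overlaps counted once."""
    total, cur = 0.0, None
    for s, e in sorted(intervals):
        if cur is None or s > cur[1]:
            if cur is not None:
                total += cur[1] - cur[0]
            cur = [s, e]
        else:
            cur[1] = max(cur[1], e)
    if cur is not None:
        total += cur[1] - cur[0]
    return total


def functional_delay(actions):
    """Sum of gaps with no agent active, where the action before the gap and
    the action after it belong to different agents.

    actions: list of (start, end, agent).
    """
    blocks = []  # merged busy periods: [start, end, first_agent, last_agent]
    for s, e, agent in sorted(actions):
        if blocks and s <= blocks[-1][1]:
            if e >= blocks[-1][1]:
                blocks[-1][1], blocks[-1][3] = e, agent
        else:
            blocks.append([s, e, agent, agent])
    return sum(nxt[0] - prev[1]
               for prev, nxt in zip(blocks, blocks[1:])
               if prev[3] != nxt[2])


def count_collisions(distances, threshold):
    """Count entries into the region where the sampled distance < threshold."""
    count, inside = 0, False
    for d in distances:
        below = d < threshold
        if below and not inside:
            count += 1
        inside = below
    return count


def objective_metrics(human, robot, total_time):
    """human, robot: (start, end) action intervals in seconds."""
    def pct(x):
        return 100.0 * x / total_time

    h, r = union_length(human), union_length(robot)
    # time(H and R) = time(H) + time(R) - time(H or R)
    both = h + r - union_length(human + robot)
    labelled = ([(s, e, "human") for s, e in human]
                + [(s, e, "robot") for s, e in robot])
    return {
        "human_idle_pct": pct(total_time - h),
        "robot_idle_pct": pct(total_time - r),
        "concurrent_pct": pct(both),
        "functional_delay_pct": pct(functional_delay(labelled)),
        "human_actions": len(human),
        "robot_actions": len(robot),
    }


metrics = objective_metrics([(0, 10), (20, 30)], [(5, 15), (32, 40)], total_time=40)
# {'human_idle_pct': 50.0, 'robot_idle_pct': 55.0, 'concurrent_pct': 12.5,
#  'functional_delay_pct': 17.5, 'human_actions': 2, 'robot_actions': 2}
collisions = count_collisions([0.5, 0.08, 0.05, 0.3, 0.09, 0.4], threshold=0.1)  # 2
```

Counting entries below the threshold, rather than samples below it, keeps one prolonged close approach from being counted as many collisions.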

To measure the participants' perceived sense of collaboration with the robot, each participant is asked to answer a seven-point Likert-scale survey.